Instagram Reels exposing kids to ‘overtly sexual content’ next to Disney ads: report

Wall Street Journal test finds 'jarring doses of salacious content'

A new report suggests Instagram is serving up “risqué footage” of children and “overtly sexual adult videos” to younger users.

In an experiment to determine what ads the Meta-owned platform’s algorithm would recommend to fake accounts that followed “only young gymnasts, cheerleaders and other teen and preteen influencers,” The Wall Street Journal found Instagram “served jarring doses of salacious content to those test accounts.”

The fake accounts focused on Instagram’s short video Reels service and were set up after “observing that the thousands of followers of such young people’s accounts often include large numbers of adult men, and that many of the accounts who followed those children also had demonstrated interest in sex content related to both children and adults,” according to the Journal.

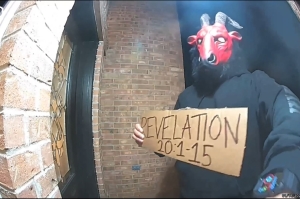

One series of recommended Reels included an ad for the Bumble dating app sandwiched between a video of “someone stroking the face of a life-size latex doll” and a “video of a young girl with a digitally obscured face lifting up her shirt to expose her midriff,” the report said.

Another stream of videos played a Pizza Hut commercial followed by a video of a man lying in bed next to “what the caption said was a 10-year-old girl.”

Other videos appearing in the test included an “adult content creator” uncrossing her legs to “reveal her underwear”; a child in a bathing suit recording herself as she poses in front of a mirror; another “adult content creator” giving a “come-hither motion”; and a girl seductively dancing in a car to a “song with sexual lyrics.”

In addition to the questionable content, the Journal said ads that “appeared regularly” in the test accounts’ feeds promoted dating apps, livestream platforms with “adult nudity,” massage parlors, and AI chatbots “built for cybersex.”

“Meta’s rules are supposed to prohibit such ads,” the report added.

In response to the experiment, a Meta spokesperson told the Journal the “tests produced a manufactured experience that doesn’t represent what billions of users see” and declined to comment on “why the algorithms compiled streams of separate videos showing children, sex and advertisements.”

Some advertisers, like Disney and Bumble, said they had already taken steps to address the issue with Meta, while others, like Walmart and Pizza Hut, declined to comment.

The report comes after another investigation by the Journal and researchers at Stanford University and the University of Massachusetts Amherst found that Instagram’s services cater to people who seek to view illicit materials by connecting them to sellers of such content through its recommendation system.

Researchers behind the investigation discovered that the platform allowed users to search for certain hashtags, which then connected them to accounts advertising child-sex material.

Last September, Instagram announced its decision to suspend Pornhub after a nonprofit group raised concerns about children's vulnerability to human trafficking and Pornhub's presence on Instagram.